Resonant Attention

My first media optimizer test with Grey, back in the Mad Men era, taught us that you must use an “impact index” for each media alternative, or the whole process is GIGO. The optimizer just buys the cheapest stuff unless you give it an impact index.
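To make the point concrete, here is a minimal sketch of how an impact index changes what an optimizer prefers. The placements, prices, index values, and the convention that 100 means average impact are all hypothetical, invented for illustration; this is not the optimizer we tested.

```python
from dataclasses import dataclass


@dataclass
class Placement:
    name: str
    cpm: float           # cost per thousand impressions, in dollars (made up)
    impact_index: float  # assumed scale: 100 = average impact (made up)


def effective_cpm(p: Placement) -> float:
    """Cost per thousand impact-weighted impressions."""
    return p.cpm / (p.impact_index / 100.0)


# Hypothetical media alternatives, not real market data.
placements = [
    Placement("Cheap inventory", cpm=5.0, impact_index=40),
    Placement("Mid inventory", cpm=8.0, impact_index=100),
    Placement("Premium inventory", cpm=12.0, impact_index=200),
]

# Without an impact index, the optimizer can only rank on raw cost.
by_cost = sorted(placements, key=lambda p: p.cpm)

# With an impact index, it ranks on cost per unit of impact instead.
by_impact = sorted(placements, key=effective_cpm)

print("Ranked by raw CPM:      ", [p.name for p in by_cost])
print("Ranked by effective CPM:", [p.name for p in by_impact])
```

With these invented numbers the two rankings are reversed: the cheapest placement wins on raw CPM and loses once cost is divided by impact.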

Luckily, we almost had a concept of impact indices, because some clients insisted we use Starch Noting scores as impact indices in buying magazines.

Many years later, the industry is mostly still buying the cheapest stuff.

TRA (2005-2014) changed that to some degree. MediaVest used the TRA index of Heavy Swing Purchasers as its impact index. Fortunately, Mediaocean has a column for an impact index. Numerous TRA clients, including Kraft, Mars, Constellation, three anonymized MediaVest clients, GroupM, et al., stood up at ARF conferences to confirm that bank account validation proved the TRA numbers were right: ROI did go up if you used the impact indices.

The driving need for impact indices today comes from digital. The need to filter for fraud and brand safety made some kind of placement-level scoring of ad impression quality necessary.

The original impact indices were about degrees of goodness. The first digital era impact indices were about degrees of badness.

Next came viewability, which inspired around 30 companies to light up the Attention level in the ARF Model. (That level was originally called Perception. At the time, Starch Noting scores, which were based on interviewing, were the only industry entity in that field.)

The ARF Attention Study Phase 2 found little connection between attention and sales. Playground xyz, an attention supplier itself, showed proof at ARF AUDIENCExSCIENCE 2024 that attention does drive memory at the upper funnel but does not impact lower funnel. I suspect the Phase 3 results will be better.

With thirty excellent suppliers to choose from, for upper funnel one can rely upon attention as an impact index you definitely want to use, at least until something beats it. It is far shrewder to use any impact index than to use none. Buying cheaper is going to get poor results, which is why agencies are being forced to do more with less – which is also a losing strategy for brands.

But what about the lower funnel? That’s where thinking is focused today. We must use an impact index, and we must therefore use attention because it is available, but how do we lift the bottom of the funnel, the ROI?

You add Resonance to Attention. Resonance can be predicted. When you use the two together you will be covered at top and bottom of funnel. Resonance has seven objective third-party sales effect validations, including ARF Cognition Council, Wharton Neuroscience, NCS, Simmons, et al. RMT, the first in the category, offers guarantees. (You know I’m Chairman of RMT.)

ARF’s Horst Stipp at an ARF annual conference in 2018 presented two types of context effects (impact indices) based on a meta-analysis of every major relevant study in the past 60-odd years. One type, which he called Attention, is based on the idea that certain program environments rivet you (e.g., the Super Bowl, the Oscars), whereas some others might bore you to sleep. It is an attribute solely of the media.

The other type, which he called Alignment, is based on the idea that the more similar the ad is to the program, the more likely it is that ad effectiveness will increase. Kwon et al. then did an even larger meta-analysis (both in the ARF Journal of Advertising Effectiveness) in which over 70 studies confirmed that the Alignment effect works.

Horst’s prophecy came true. After that conference, the attention field sprang up, and now resonance (alignment, congruence) is coming into the picture.

From the evidence on sales effects, it looks as though, to use Horst’s original terminology, Alignment has more leverage than Attention: the score that reflects both the ad and the context in relation to one another is having more lower funnel effect than the score that reflects only the attributes of the media, without considering the ad.

I come at it another way. How strong is the signal, I ask myself. If it’s a strong signal I’m going to want to use it. What if I have two signals, one stronger than the other? My answer is to use both. If I’m trying to get the best prediction score (Adjusted R Squared), I’m better off retaining some of the other signals, not just using the one strongest metric.
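Here is a small sketch of that reasoning on synthetic data. The signal names, effect sizes, and noise level are invented purely for illustration and are not results from any study. It uses the standard closed form for R Squared with two predictors, then adjusts for the number of predictors.

```python
import random


def pearson(x, y):
    """Pearson correlation between two equal-length lists."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / (sxx * syy) ** 0.5


def r2_two_predictors(x1, x2, y):
    """R Squared of a linear model of y on x1 and x2, from correlations."""
    r1, r2, r12 = pearson(x1, y), pearson(x2, y), pearson(x1, x2)
    return (r1 ** 2 + r2 ** 2 - 2 * r1 * r2 * r12) / (1 - r12 ** 2)


def adjusted_r2(r2, n, k):
    """Adjusted R Squared for n observations and k predictors."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


random.seed(0)
n = 500
# Synthetic, made-up signals: one stronger, one weaker, plus noise.
strong = [random.gauss(0, 1) for _ in range(n)]
weak = [random.gauss(0, 1) for _ in range(n)]
sales = [1.0 * s + 0.5 * w + random.gauss(0, 1) for s, w in zip(strong, weak)]

adj_strong = adjusted_r2(pearson(strong, sales) ** 2, n, 1)
adj_weak = adjusted_r2(pearson(weak, sales) ** 2, n, 1)
adj_both = adjusted_r2(r2_two_predictors(strong, weak, sales), n, 2)

print(f"strong signal alone: {adj_strong:.3f}")
print(f"weak signal alone:   {adj_weak:.3f}")
print(f"both signals:        {adj_both:.3f}")
```

In this toy setup the two-signal model scores higher than the strongest signal alone, even after the adjustment penalizes the extra predictor. Real signals are usually correlated with one another, so the gain in practice depends on how much new information the second signal carries.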

Settling for the one strongest metric is more of that pound foolishness of buying the cheapest.

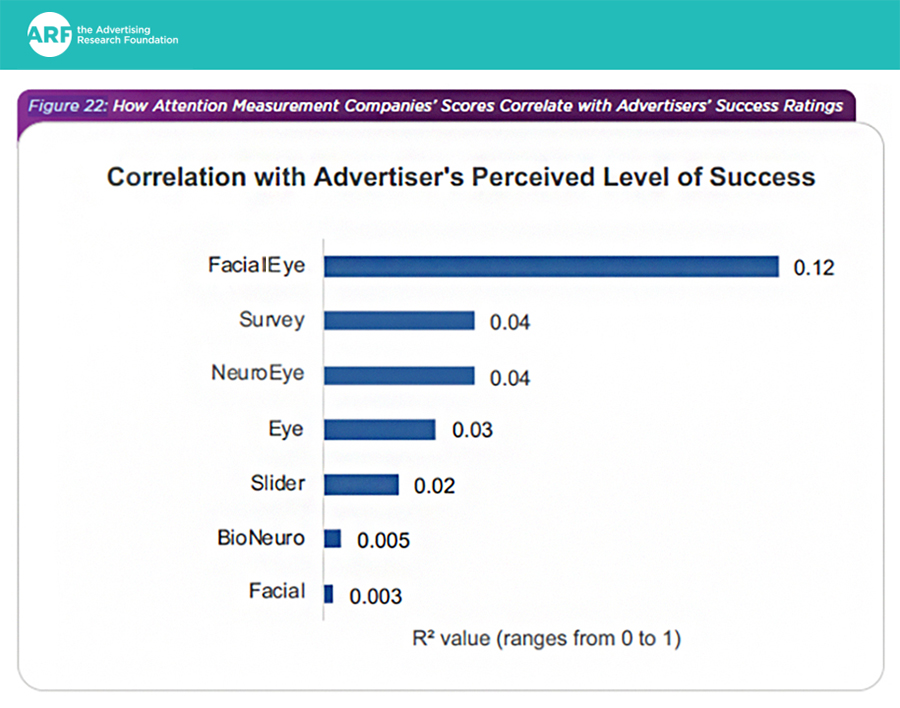

For example, this chart from the ARF Attention Study Phase 2 shows that combined techniques can be very strongly synergistic. The combination of facial emotion recognition and eye tracking has a prediction score of 0.12, whereas facial emotion recognition on its own scored 0.003 and eye tracking on its own scored 0.03. If those were merely additive, they would sum to 0.033; instead, the combined score is about 3.6 times that.

https://thearf.org/category/ua_resource/arf-attention-measurement-validation-initiative-phase-2-report-2nd-edition-2/
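The arithmetic behind that synergy comparison can be checked in a few lines. The three scores are the ones quoted above from the chart; the variable names are just labels chosen for this sketch.

```python
# Prediction scores as quoted in the text from the ARF Phase 2 chart.
facial_emotion_alone = 0.003
eye_tracking_alone = 0.03
combined = 0.12

# What the two techniques would reach if their scores simply added.
additive = facial_emotion_alone + eye_tracking_alone

# How many times larger the observed combined score is.
ratio = combined / additive

print(f"additive expectation: {additive:.3f}")
print(f"combined / additive:  {ratio:.1f}x")
```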

RMT is looking forward to offering Resonant Attention with attention suppliers, and already has announced collaboration with Amplified Intelligence, Chilmark, and Viomba. The Viomba combined product is already in market through our co-partner Semasio. And a neuro-enhanced version of it with partner Wharton Neuroscience has also been announced.

Together, the attention and resonance industry will bloom into the impact index industry – providing the Video Advertising Bureau and other purveyors of premium content with the Impression Quality dimension they rightly want so much. It will be Resonant Attention that they want.

Posted at MediaVillage through the Thought Leadership self-publishing platform.

The opinions expressed here are the author's views and do not necessarily represent the views of MediaVillage.org/MyersBizNet.