Nielsen Proves that Accuracy Cannot Be Affordably Sacrificed

And other highlights from the ARF Accelerator Conference

The annual Marketing Analytics Accelerator Conference, run by the Advertising Research Foundation (ARF) in partnership with Sequent Partners, is aimed at helping the industry more quickly identify the best paths to producing and measuring business outcomes from advertising. They continue to do a great job.

At last week’s 2024 conference, Nielsen Senior Vice President Kristin Vento showed a most revealing slide in her presentation with Adam Isselbacher, Senior Vice President, Group Director, Research & Analytics, IPG Mediabrands’ UM. Nielsen, contrary to its image among the uninitiated, is a leader in the use of big data. It has big data from 50 million households, plus over 20 spine-to-spine integrations with major media platforms like Amazon, covering more than 3 out of 4 US households with census-level data (for some measurements where integrations are not in place yet, the proportion is lower). Nielsen uniquely uses the panel, AI, the streaming meter, common device correction, watermarks, wearable PPMs, and other methods to adjust the big data and thereby create currency.

The question I’ve always had is how different the results would be if Nielsen didn’t use all that other stuff to adjust the big data. What if they dropped it all and just reported the big data they have acquired?

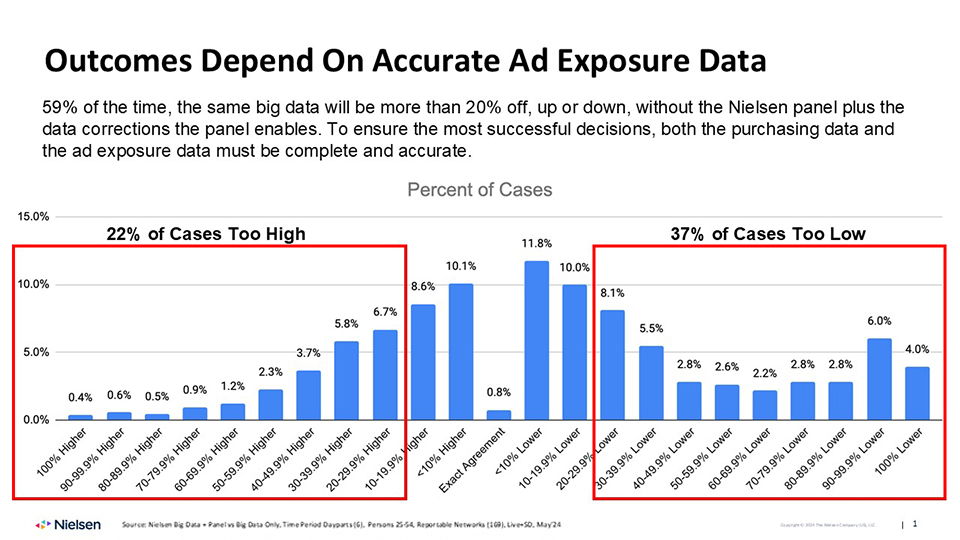

Kristin showed the slide above, which compares the published final currency against what Nielsen would report from its big data alone (acquired from others). It shows that 59% of the time the program rating differs by more than 20% between the big data on its own and the big data after calibration by the Nielsen suite. Exact agreement occurs only 0.8% of the time. The two come within 10% of each other only 22.7% of the time.
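To make the comparison concrete, here is a minimal sketch of how agreement buckets like these could be tallied. The ratings are made-up illustrative numbers, not Nielsen data, and the function name `agreement_buckets` is hypothetical. It also assumes the "within 10%" bucket is cumulative and includes exact matches, which the slide description does not confirm.

```python
def agreement_buckets(calibrated, uncalibrated):
    """Share of programs whose uncalibrated rating falls in each gap bucket.

    The gap is the absolute percent difference relative to the calibrated
    (currency) rating. Assumption: "within 10%" includes exact matches.
    """
    if len(calibrated) != len(uncalibrated):
        raise ValueError("rating lists must be the same length")
    gaps = [abs(u - c) / c * 100 for c, u in zip(calibrated, uncalibrated)]
    n = len(gaps)
    return {
        "exact": sum(g == 0 for g in gaps) / n,
        "within_10pct": sum(g <= 10 for g in gaps) / n,
        "over_20pct": sum(g > 20 for g in gaps) / n,
    }

# Made-up ratings for five programs (hypothetical, not Nielsen data).
calibrated = [2.0, 1.0, 4.0, 0.5, 3.0]
uncalibrated = [2.0, 1.3, 4.2, 0.8, 2.1]

shares = agreement_buckets(calibrated, uncalibrated)
print(shares)
```

In this toy set, three of the five programs miss the calibrated rating by more than 20%, which is the kind of gap the slide reports at scale.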

Although the Nielsen big data are the heart of the data, without calibration they are far off.

Kristin pointed out that these errors on the part of uncalibrated big data would carry over into outcomes measurement. Ad exposure data is the foundation of the house. If it is wrong, so will be the MMM, MTA, single-source, randomized controlled trial, and any other outcome method. The outcome results used to make multimillion-dollar decisions will be wrong. The decisions will be wrong. The wrongdoers will be punished. The brands will be ill-served.

Why is it that the big data on its own – with reweighting to offset demographic biases and the best of other methodologies for cleaning, editing, and projecting the data – is so far from the Nielsen currency? So much of the viewing is by streaming, and only Nielsen has a streaming meter. The smart TV data are purposely incomplete for cost reasons. There are many other causes but those are primary.

Kristin also reported from Nielsen’s annual marketing survey of advertisers that only about a third of advertisers are using measurement data in which TV and digital are both included.

Adam Isselbacher highlighted the critical role of maximizing cross-platform measurement precision to fully capture ROI variations across channels, brand categories, diverse audience segments, and markets. Isselbacher emphasized that consumption habits can vary greatly across countries, requiring tailored, data-driven approaches to measurement. Drawing on Nielsen’s largest brand equity study to date, conducted in collaboration with IPG Mediabrands’ UM, Isselbacher discussed how this expansive effort (spanning over 25 countries and languages, with a global sample of more than 150,000 respondents) helped define leading-indicator media metrics that drive full-funnel brand equity metrics, ultimately driving higher ROI.

“Robust, well-calibrated data is the backbone for actionable insights that elevate media planning to build brand equity and ROI,” Isselbacher explained, reinforcing the necessity of combining traditional and digital touchpoints to ensure accurate and comprehensive measurement. Drawing on insights from this extensive study, he emphasized the value of data precision and integrated measurement in optimizing campaign success to foster a legacy of strong brand growth while achieving business goals in the near term.

Attention

I had the pleasure of working with Mars for years at TRA. I greatly respect their meticulous approach to measurement and insistence on including incremental sales in measurement. I also had the pleasure of working with Realeyes last year in the landmark study for Meta with Eye Square. My respect for them is just as great, based on my experience working together. At the ARF Accelerator the other day Joanna Welch, Global Mars Horizon Comms Lab Associate Director, and Max Kalehoff, Chief Growth Officer at Realeyes, presented the finding that the use of Realeyes’ new AI-based product leads to a 3% to 5% sales lift. RMT ad-context resonance has shown 36% sales lift according to NCS, and RMT ad-person resonance 95% sales lift per Neustar. The correlation between the Realeyes predictive attention and sales is 0.62, so the R² would be 0.38. The ARF Cognition Council found that the comparable R² for RMT is 0.48. The guidance to the buy side would be to combine these two sales impact predictors – attention and resonance – in media selection.
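The arithmetic behind those two figures is simple: for a single predictor, R² is the square of the correlation coefficient. A quick check follows, assuming both figures come from one-predictor relationships (the source does not say how the RMT R² was computed, so the implied correlation is only indicative).

```python
import math

realeyes_r = 0.62              # correlation reported for Realeyes predictive attention
realeyes_r2 = realeyes_r ** 2  # share of sales variance explained

rmt_r2 = 0.48                  # R² reported by the ARF Cognition Council for RMT
rmt_r = math.sqrt(rmt_r2)      # implied correlation, if it is a one-predictor fit

print(f"Realeyes R² = {realeyes_r2:.2f}")  # Realeyes R² = 0.38
print(f"RMT implied r = {rmt_r:.2f}")      # RMT implied r = 0.69
```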

I believe these results. Realeyes is truly predicting the attention a piece of creative will gain, which enables Mars to withhold media money from some executions. Excluding creative that would not gain attention should logically increase the budget behind attention-getting creative. Although neuroscience points out that conscious attention to an ad is not absolutely necessary for the ad to have effect (because of the importance of the subconscious), ads should logically have more effect if they gain attention. If they don’t gain attention, it’s because they don’t resonate with the subconscious motivational drivers in the viewer. If they do gain attention, it’s because the subconscious resonated, and this drove the viewer to pay attention.

Joanna pointed out that with generative AI the number of executions that need to be tested across all of the countries in the world exceeds the scalability of anything other than an AI predictive model, which is what the new Realeyes product is. It’s based on past eye tracking at great scale (17 million observations). It itself is not eye tracking, but an AI model predicting attention. And it works. The AI not only predicts but it also recommends improvements that could increase an ad’s attention-getting power.

Joanna pointed out that before this Realeyes model, Mars was only able to pretest 2% of the creative executions it ran.

One thing I really like about the new Realeyes model is that it is plug-and-play by API. I know that Amplified Intelligence has also been using that approach, and I expect we will all be using it before long.

More AI

Michael True, Co-Founder and CEO of Prescient AI, and his client Cameron Bush, Head of Advertising at HexClad, showed the Prescient AI platform, a marketing mix model (MMM) with machine learning (ML) that overcomes the last-click bias of traditional MTA and provides daily campaign-level output data that, in terms of the reporting formats and breakouts, looks like both MMM and MTA. (Pioneering modeler Michael Cohen, written up in my recent CIMM Cutting Edge ROI study, has another proprietary model with that same reporting feature.)

DataPOEM Founder Bharath Gaddam showed their method which uses Pearl’s Causal Ladder focusing on counterfactuals – seeing how the results would change by removing one media element or creative execution.
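As a rough illustration of the counterfactual idea only, and not DataPOEM's actual method, the sketch below asks how predicted sales would change if one channel were removed while everything else is held equal. The response model, its coefficients, the channel names, and the spend figures are all hypothetical.

```python
import math

# Hypothetical response model: baseline sales plus a diminishing-returns
# (square-root) contribution from each channel. Coefficients are made up.
BASELINE = 100.0
COEFFICIENTS = {"tv": 3.0, "search": 2.0, "social": 1.0}

def predicted_sales(spend):
    """Predicted sales for a dict of channel -> spend under the toy model."""
    return BASELINE + sum(
        COEFFICIENTS[ch] * math.sqrt(amount) for ch, amount in spend.items()
    )

def counterfactual_lift(spend, channel):
    """Sales lost if `channel` had been removed, all else held equal."""
    without = {ch: (0.0 if ch == channel else amt) for ch, amt in spend.items()}
    return predicted_sales(spend) - predicted_sales(without)

# Made-up spend levels for three channels.
actual_spend = {"tv": 400.0, "search": 100.0, "social": 25.0}
for ch in actual_spend:
    print(ch, counterfactual_lift(actual_spend, ch))
```

Because this toy model is purely additive, each channel's counterfactual lift equals its own term. In a model with interactions between channels, removing one element would also change what the others contribute, which is where counterfactual analysis earns its keep.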

Steve Cohen, Partner, In4mation Insights, and John Fix, Consultant, John Fix Ltd, showed their new AI product which predicts later touchpoints the consumer will experience with a campaign.

Branding and Sales

Mike Menkes, Vice President of Customer Engagement, Analytic Partners, and his client Laura Guerin, Sr. Director, Consumer Analytics, SharkNinja, showed data proving that brand equity ads outperform product + performance/offer ads in terms of sales – by 73%.

Analytic Partners has been known as an MMM vendor, but today they practice Commercial Analytics, their term for the integration of diverse metrics, which they also characterize as Nested Funnel Modeling. They oppose the current practice of siloed metrics. They showed a grid of media channels and retail channels, and examples of the halo effect (an ad for one brand synergistically benefits a sister brand). (Leslie Wood first showed me her analyses of halo effects in TRA days.)

Altogether, the conference upheld the level of excellence we think of when we think of ARF and Sequent. Jim Spaeth and Alice Sylvester are taking down the Sequent shingle and Alice will continue to consult while Jim gets to retire with his beautiful and brilliant family. A happy ending. Hopefully with endless reunions. The media research community contains great love and bonds that will never be severed.

Posted at MediaVillage through the Thought Leadership self-publishing platform.

The opinions expressed here are the author's views and do not necessarily represent the views of MediaVillage.org/MyersBizNet.