ARF Attention Phase 2 Report Overturns Widely Held Assumptions

The Advertising Research Foundation (ARF) has just released the findings of the second phase of its three-phase study of the validity of attention measurement. As you may recall, the first phase was the publication of an Atlas of 29 attention measurement and prediction companies, dissecting differing definitions and methodologies, and containing links to case studies provided by each company.

In the second phase, 12 of these companies participated in an analysis of the degree to which their pretesting of video ads, focusing on attention and in some cases also emotion, provided measurements of the creative that agree with the advertiser’s assessment of the in-market business success of these ads. This was the question: do the attention pretests agree with the actual in-market results in categorizing ads as worthy of running or not?

Phase three, which is now beginning, will analyze the validity of using these varied attentional methods to filter out low-attention media placements.

The results just released for phase two were shocking to many people. What they show is that attention measures are not the best practice for deciding which ads to run or not run.

In the current industry culture, a vast amount of prior industry learning has been ignored. In that environment, it has been widely assumed that the key thing to measure is whether an ad is going to get attention, and the rest would take care of itself. This assumption has been shattered by the data I’m about to summarize from the ARF phase two report.

After that summary, I’ll turn back the clock to reveal what we knew all along – those of us who had not ignored previous industry learning from the ARF. This will show that the phase two results ought not to have been so surprising.

Summary of Phase Two Results

- It had been hoped that the 12 different suppliers would exhibit convergent validity – in other words, that the suppliers would tend to agree with each other. However, not much convergence was seen. For one set of 4 ads for one of the test brands, all of which followed the “problem-solution” format, there was a reasonable degree of agreement. Across all 32 ads in the study, however, this was not the case. Two suppliers used survey methods and their results had more convergence than the others. Two others using both eye tracking and facial emotion coding also showed a bit more agreement with each other.

- When second-by-second results were compared across the length of each test ad, most suppliers tended to have a “signature” curve pattern that appeared for every ad tested, yet that pattern differed markedly from one supplier to another. This suggests instrument bias, i.e. the instrument has more effect on the data than the stimulus does.

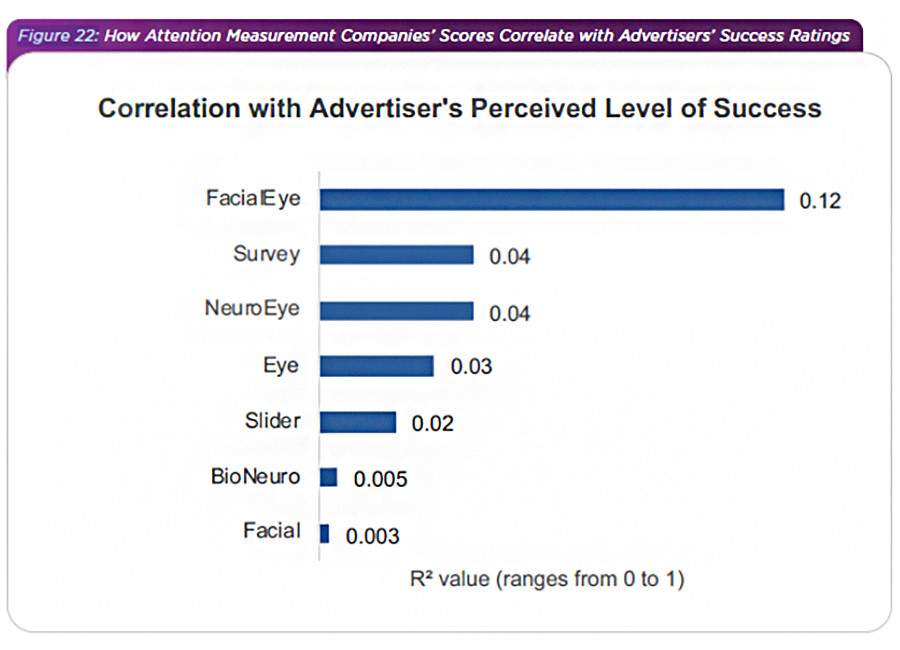

- The advertisers had shared with ARF but not with the attention measurers the degree of marketplace success of each ad, on a four-point scale. The advertisers also shared their Key Performance Indicator (KPI), which in most cases was sales/brand growth, with the ARF and with the suppliers. The R² between supplier measures and the advertiser’s ground truth was 0.12 for the supplier with the highest score.

- Where sales was the KPI, and the supplier claimed predictivity of sales effect, the R² values were 0.0007 for one supplier and 0.004 for the other. This shows essentially no relationship between these suppliers’ attention/emotion measures and sales effect.

- Previous ARF studies (more about them in a moment) had shown that the most important thing marketers need from ad pretesting is the ability to detect an outstandingly successful ad. Yet none of the suppliers identified the one most successful ad in the study as judged by the advertiser; all suppliers undervalued it by between 20% and 70%.

- It was also learned long ago that marketers need to be able to detect the ads that would waste the most money. Yet in the ARF phase two report, all but one supplier overestimated a low-performing ad by between 60% and 360%, while the remaining supplier underestimated it by 96%.
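To put the R² figures above in perspective, here is a minimal sketch of how such a number can be read. It assumes the reported values behave like the R² of a simple one-predictor linear fit, in which case R² is the square of the Pearson correlation; the report's exact method may differ. The function names and the "supplier A" and "supplier B" labels are hypothetical, used only for illustration.

```python
import math

def r_squared(xs, ys):
    """R² of a simple linear fit of ys on xs (equal to Pearson r squared)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    return (sxy * sxy) / (sxx * syy)

def implied_abs_correlation(r2):
    """Size of the correlation implied by an R², assuming one predictor."""
    return math.sqrt(r2)

# R² values quoted in the article; the labels are hypothetical placeholders.
reported = {
    "best supplier vs. advertiser ground truth": 0.12,
    "sales KPI, supplier A": 0.0007,
    "sales KPI, supplier B": 0.004,
}

for label, r2 in reported.items():
    r = implied_abs_correlation(r2)
    print(f"{label}: R2 = {r2}, |r| about {r:.3f}, variance explained {r2:.2%}")
```

Under that assumption, an R² of 0.12 corresponds to a correlation of roughly 0.35 and leaves 88% of the variation in the advertisers' ratings unexplained, while the two sales figures correspond to correlations below 0.07.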

The study had many other important conclusions including far higher attention levels for TV vs. digital, a fascinating analysis of humor in ads, and many other subjects which make the actual report a must-read for marketers. ARF Members may retrieve the full report here.

Why The ARF Lessons Of The Past Must Be Learned

What are the implications? First and foremost, advertisers must remember the learnings of the past, and pretest ads using persuasion, liking, and recall-type measures as well as attention; attention cannot be assumed to be a proxy for all of these things. Attention in itself does not ensure sales effect. Persuasion and ad liking together definitely do ensure sales effect (if there is adequate store distribution, shelf facings, competitive pricing, and sufficient ad spend). And a supplier who naïvely assumes that the way persuasion and ad liking are measured doesn’t matter, and that any improvised method will do, is wrong.

In the 1960s, the ARF Model showed that an ad had to achieve exposure, communication, and persuasion in order to cause incremental sales. The ARF Model was updated in 2003 by Erwin Ephron, Jim Spaeth, Bill Moran, Denman Maroney, Phil Brandon and myself, and one of the things we added was Ad Attentiveness just above Ad Exposure and just below Ad Communication and Ad Persuasion.

In recent times, essentially in the last seven years, Attention has become the widely assumed surrogate for every level in the ARF Model.

In the '80s and into the early '90s, the ARF conducted the ARF Copy Research Validity Project (CRVP). This was a comparison of 35 different metrics to see which ones had the greatest ability to predict which of two ads for the same brand produced higher sales effect, as measured by Behaviorscan. We recommend that ARF Members ask ARF for the relevant documents, which include not only the CRVP report but also the follow-on work that ARF published in its Journal of Advertising Research. Together this body of work establishes that persuasion and ad liking are the two best indicators of likely sales impact of a video ad, and that certain ways of getting at these two dimensions are better than others.

Fourteen of the 35 measures achieved over 90% ability to discern which of two commercials for the same brand would have the higher sales effect. Not a single one of the 35 measures was attention. In the '80s and '90s, attention did not seem to the world’s finest advertising research minds to be worth inclusion in this close-to-a-million-dollar study, which took years to complete.

Five of the 14 winning measures were composites based on two separate dimensions orthogonal to one another. This supports the idea of combining attention with some other proven measure of predicting advertising sales effect – for example, the one I invented called RMT Resonance. Happy to bolster the attention suppliers in this way.

Compare the over 90% ability to predict sales effect based on persuasion and ad liking with the generally lower than 12% shown for attention/emotion in the table above from the ARF Phase Two Attention Measurement Validation Study.

What this reminds us is that when pretesting ads, yes include attention, but emphasize the rigor with which you measure persuasion and ad liking as more important than attention. If you measure that way and detect an ad that is high attention but low persuasion and/or ad liking, don’t put too much media investment into that ad.

What shall we see in Phase Three, when attention is applied to media selection?

I think attention will fare noticeably better in that application. As Karen Nelson-Field has said, digital contexts generally limit attention to 1-2 seconds for scrolling feeds and 3-5 seconds for YouTube “Skip in 54321” ads.

Limits like these would cause some marketers to shy away from those types of contexts entirely, whereas today the bulk of marketers have collectively invested an annual $600 billion globally in digital, which is mostly in short-attention contexts. Given their over-investment in those situations, it’s understandable that attention has become the tunnel-vision winner for today’s marketers, most of whom grew up on social media and don’t quite see that their bias is shortening the lifespan of their brands and perhaps, at some point, of their own careers.

Cover image source: The Advertising Research Foundation (ARF)

Posted at MediaVillage through the Thought Leadership self-publishing platform.

The opinions expressed here are the author's views and do not necessarily represent the views of MediaVillage.org/MyersBizNet.